What AI Barbiecore Says About Bias – and Us

- November 13, 2023

- Written by Cheni Vega

The Summer of Barbie ran long; anybody who doubts it need only look at some Halloween photos for proof. So it’s only natural that the enthusiasm found its way into another of America’s favorite pastimes of 2023: clickbait articles about how AI pictures things.

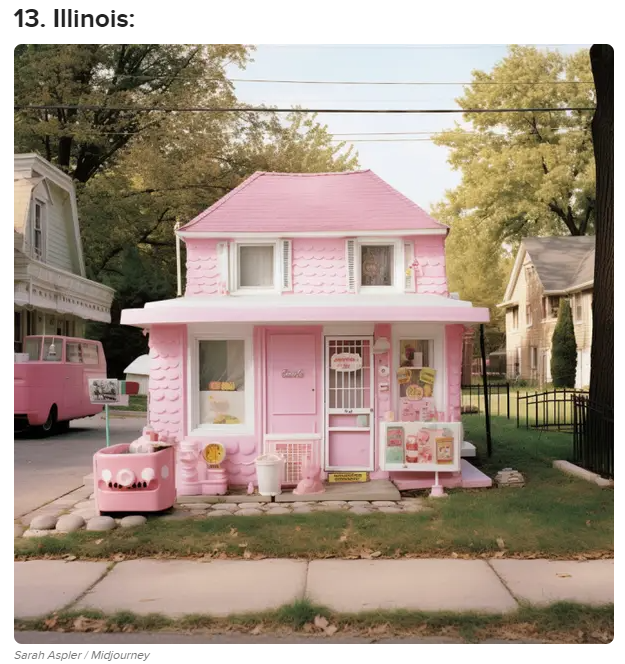

Some of the AI renderings, like what Barbies (and their Dreamhouses!) from each U.S. state or British county would look like, turned out to be fairly anodyne. Others…less so.

In an early stab at one of these, BuzzFeed asked Midjourney, a generative text-to-image AI, to produce images of a Barbie from each of the 194 countries in the world. We’d link the piece, but BuzzFeed took it down following a storm of criticism. Why the negative discourse? Many of the images reflected cultural or racist stereotypes; others were simply way off in terms of costume or physical appearance. Most notoriously, the Filipina, Singaporean, and Thai Barbies were all blonde. That may reflect not only Western biases but also colorism, a long-standing and serious issue within Asian societies as well as other parts of the world, and an important reminder that white, Western biases aren’t the only ones out there.

Where AI Bias Comes From

Given how text-to-image AIs work, we shouldn’t have been surprised at this outcome. Scraping data from the web means that many, if not all, of online humanity’s biases are scraped as well. Lebanon’s online presence, for example, is small compared with that of the United States, so portrayals of Lebanon and Lebanese people are much more likely to reflect American views than Lebanese ones. Perceptions truly do shape the reality of generative AI, and online those perceptions are disproportionately (although not exclusively!) Western and white.

Of course, the scraped data is only the clay that generative AI works with. Prompts are the vision. A loosely written prompt allows more pre-existing bias to find its way into the AI’s work. A more carefully written prompt can, in theory, help alleviate this. But there’s the rub – in order to write a prompt that adjusts for bias, we need to understand exactly what the bias is and how severe it is, so that we don’t replace one false image with another. Fortunately, AI bias carries the seeds of its own alleviation…and a potentially rich research vein as well.

Examining and Combatting Group Biases and Perceptions

Running large numbers of unaltered prompts tells us about the overall bias in the datasets scraped by generative AI. So far, so good; we can do this, and we already have been. But we need not simply look at the lowest common denominator (or “general market” in marketing-speak) and make adjustments from there. Rather, we can dig deeper, adjusting our prompts to examine the biases of subcultures and culturally specific groups of people.
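For readers who want to see what that kind of comparison might look like in practice, here is a minimal sketch in Python. It assumes someone has already reviewed a batch of generated images and hand-labeled one attribute (hair color here). The function names `attribute_shares` and `share_gap` are hypothetical, and the labels are made-up illustrative values, not results from any real model.

```python
from collections import Counter


def attribute_shares(labels):
    """Return each label's share of the batch, e.g. {'blonde': 0.75, 'black': 0.25}."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def share_gap(baseline, variant):
    """Share of each label in `variant` minus its share in `baseline`."""
    base = attribute_shares(baseline)
    var = attribute_shares(variant)
    return {
        label: var.get(label, 0.0) - base.get(label, 0.0)
        for label in sorted(set(base) | set(var))
    }


# Hypothetical hand-labeled hair colors for images from two prompt styles.
# These values are invented for illustration only.
unaltered = ["blonde", "blonde", "blonde", "black"]
adjusted = ["black", "black", "blonde", "black"]

gaps = share_gap(unaltered, adjusted)
for label, gap in gaps.items():
    print(f"{label}: {gap:+.2f}")
```

A large gap between the unaltered batch and the adjusted one is a rough signal of how much the default output leans on a given attribute. A real study would need far more images per prompt and a consistent labeling process.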

How do Latinos and Black people think about each other? How do they think about Afro-Latinos? Asians? LGBTQ individuals, the community as a whole, and its constituent parts? How do people in these groups think about themselves? Are there differences between sub-groups, or from region to region? Popular beliefs about the answers to these questions, many of which themselves rest upon assumption and projection, basically boil down to stereotypes about stereotypes. The current biases inherent to generative AI allow us the opportunity to test them all.

Implications for Marketers

What does all this mean for inclusivity in commercial communication? Opportunity. By understanding the full scope of group biases, we can paint particular and precise bull’s-eyes on those biases in both internal and external branding. This will help brands combat harmful self-perceptions and bridge gaps between disparate groups and subcultures, all while more authentic representation helps them communicate more effectively and meaningfully with target markets. Knowledge is power, and in the case of understanding bias, it’s the power to do well by doing good. We’re doubly powerful in what we create in today’s world – not only do we directly shape today’s perceptions and biases, but with AI learning from what we put out there now, we’re already shaping tomorrow’s. Let’s use that power wisely. To learn more about how to use AI in your work, contact us today.